Data generation

First, we generate a dataset with the peerannot simulate command. The dataset has 30 workers and 200 tasks spread over 5 classes, and each task receives 10 votes.

[1]:

from pathlib import Path

path = (Path() / ".." / "_build" / "notebooks")

path.mkdir(exist_ok=True, parents=True)

! peerannot simulate --n-worker=30 --n-task=200 --n-classes=5 \
    --strategy independent-confusion \
    --feedback=10 --seed 0 \
    --folder ../_build/notebooks/

Saved answers at ../_build/notebooks/answers.json

Saved ground truth at ../_build/notebooks/ground_truth.npy

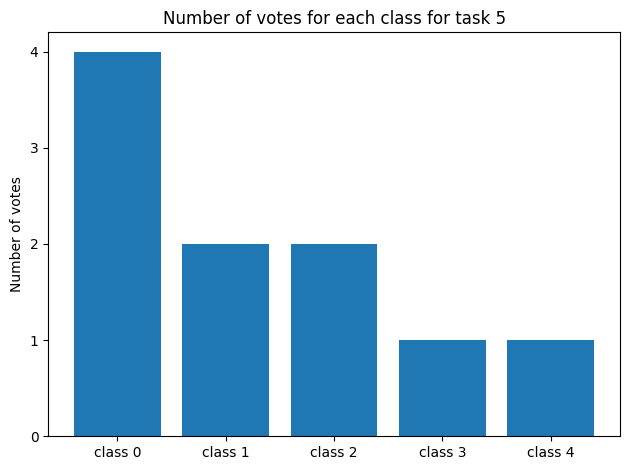

We can visualize the generated votes and the true label of any task. For example, consider task 5:

[2]:

import json

import numpy as np

import matplotlib.pyplot as plt

with open(path / "answers.json") as f:

    answers = json.load(f)

gt = np.load(path / "ground_truth.npy")

print("Task 5:", answers["5"])

print("Number of votes:", len(answers["5"]))

print("Ground truth:", gt[5])

fig, ax = plt.subplots()

counts = np.bincount(list(answers["5"].values()), minlength=5)

classes = [f"class {i}" for i in range(5)]

ax.bar(classes, counts)

plt.yticks(range(0, max(counts)+1))

ax.set_ylabel("Number of votes")

ax.set_title("Number of votes for each class for task 5")

plt.tight_layout()

plt.show()

Task 5: {'2': 1, '3': 0, '4': 0, '5': 3, '9': 0, '11': 1, '15': 2, '17': 4, '21': 0, '26': 2}

Number of votes: 10

Ground truth: 0
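The printed dictionary maps each worker identifier to the class that worker voted for. As a minimal sketch of how these votes can be tallied by hand, the snippet below copies the votes for task 5 from the output above and counts them per class. The variable names are illustrative and not part of peerannot.

```python
import numpy as np

# Votes for task 5, copied from the output above: {worker id: voted class}
task_votes = {"2": 1, "3": 0, "4": 0, "5": 3, "9": 0,
              "11": 1, "15": 2, "17": 4, "21": 0, "26": 2}
n_classes = 5  # number of classes used in the simulation

# Count how many workers voted for each class
counts = np.bincount(list(task_votes.values()), minlength=n_classes)

# The most voted class and the share of votes each class received
majority_label = int(np.argmax(counts))
vote_share = counts / counts.sum()

print("Votes per class:", counts.tolist())
print("Most voted class:", majority_label)
print("Vote share:", vote_share.tolist())
```

For this task, class 0 receives 4 of the 10 votes, which agrees with the ground truth printed above.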

Command Line Aggregation

Let us run some aggregation methods on the dataset we just generated using the command line interface.

[3]:

for strat in ["MV", "NaiveSoft", "DS", "GLAD", "DSWC[L=5]", "Wawa"]:

    ! peerannot aggregate ../_build/notebooks/ -s {strat}

Running aggregation mv with options {}

Aggregated labels stored at /home/circleci/project/doc/_build/notebooks/labels/labels_independent-confusion_mv.npy with shape (200,)

Running aggregation naivesoft with options {}

Aggregated labels stored at /home/circleci/project/doc/_build/notebooks/labels/labels_independent-confusion_naivesoft.npy with shape (200, 5)

Running aggregation ds with options {}

Finished: 40%|████████████▊ | 20/50 [00:00<00:00, 136.89it/s]

Aggregated labels stored at /home/circleci/project/doc/_build/notebooks/labels/labels_independent-confusion_ds.npy with shape (200, 5)

Running aggregation glad with options {}

- Running EM

Finished: 74%|████████████████████████▍ | 37/50 [00:31<00:11, 1.16it/s]

Task difficulty coefficients saved at /home/circleci/project/doc/_build/notebooks/identification/glad/difficulties.npy

Worker ability coefficients saved at /home/circleci/project/doc/_build/notebooks/identification/glad/abilities.npy

Aggregated labels stored at /home/circleci/project/doc/_build/notebooks/labels/labels_independent-confusion_glad.npy with shape (200, 5)

Running aggregation dswc with options {'L': 5}

Aggregated labels stored at /home/circleci/project/doc/_build/notebooks/labels/labels_independent-confusion_dswc[l=5].npy with shape (200, 5)

Running aggregation wawa with options {}

/home/circleci/project/peerannot/models/aggregation/Wawa.py:59: UserWarning:

Wawa aggregation only returns hard labels.

Defaulting to ``get_answers()``.

warnings.warn(

Aggregated labels stored at /home/circleci/project/doc/_build/notebooks/labels/labels_independent-confusion_wawa.npy with shape (200,)

Since we know the ground truth, we can evaluate the performance of the aggregation methods. In this example we use accuracy, but other metrics such as the F1-score, precision, or recall can be used as well.

[4]:

import pandas as pd

def accuracy(labels, gt):

    return np.mean(labels == gt) if labels.ndim == 1 else np.mean(np.argmax(labels, axis=1) == gt)

results = { # initialize results dictionary

"mv": [],

"naivesoft": [],

"glad": [],

"ds": [],

"wawa": [],

"dswc[l=5]": [],

}

for strategy in results.keys():

    path_labels = path / "labels" / f"labels_independent-confusion_{strategy}.npy"

    labels = np.load(path_labels) # load aggregated labels

    results[strategy].append(accuracy(labels, gt)) # compute accuracy

results["NS"] = results["naivesoft"] # rename naivesoft to NS

results.pop("naivesoft")

# Styling the results

results = pd.DataFrame(results, index=["AccTrain"])

results.columns = map(str.upper, results.columns)

results = results.style.set_table_styles(

[dict(selector="th", props=[("text-align", "center")])]

)

results.set_properties(**{"text-align": "center"})

results = results.format(precision=3)

results

[4]:

| | MV | GLAD | DS | WAWA | DSWC[L=5] | NS |
|---|---|---|---|---|---|---|
| AccTrain | 0.760 | 0.780 | 0.890 | 0.775 | 0.775 | 0.760 |
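Accuracy is only one possible metric. As a rough sketch of how another one could be computed, the snippet below implements a macro-averaged F1-score with NumPy only. The helpers to_hard_labels and macro_f1 are hypothetical names introduced here, not peerannot functions, and scoring a class that never appears as 0 is an assumption of this sketch. Like the accuracy function above, it accepts either hard labels or soft labels of shape (n_tasks, n_classes).

```python
import numpy as np


def to_hard_labels(labels):
    """Turn soft labels (n_tasks, n_classes) into hard labels (n_tasks,)."""
    labels = np.asarray(labels)
    return labels if labels.ndim == 1 else np.argmax(labels, axis=1)


def macro_f1(labels, gt, n_classes):
    """Unweighted mean of the per-class F1-scores."""
    pred = to_hard_labels(labels)
    gt = np.asarray(gt)
    f1_scores = []
    for k in range(n_classes):
        tp = np.sum((pred == k) & (gt == k))  # true positives for class k
        fp = np.sum((pred == k) & (gt != k))  # false positives for class k
        fn = np.sum((pred != k) & (gt == k))  # false negatives for class k
        denom = 2 * tp + fp + fn
        # Assumption: a class that is never predicted nor present scores 0
        f1_scores.append(2 * tp / denom if denom > 0 else 0.0)
    return float(np.mean(f1_scores))


# Toy data (not taken from the simulated dataset)
toy_gt = np.array([0, 0, 1, 1, 2, 2])
toy_pred = np.array([0, 1, 1, 1, 2, 0])
print("Macro F1:", round(macro_f1(toy_pred, toy_gt, n_classes=3), 3))
```

With the notebook's variables, the same function could be called as macro_f1(labels, gt, n_classes=5) inside the evaluation loop.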

API Aggregation

We showed how to use the command line interface, but what about the API? It’s just as simple!

[5]:

from peerannot.models import agg_strategies

strategies = ["MV", "GLAD", "DS", "NaiveSoft", "DSWC", "Wawa"]

yhats = []

for strat in strategies:

    agg = agg_strategies[strat]

    if strat != "DSWC":

        agg = agg(answers, n_classes=5, n_workers=30, n_tasks=200, dataset=path)

    else:

        agg = agg(answers, L=5, n_classes=5, n_workers=30, n_tasks=200)

    if hasattr(agg, "run"):

        agg.run(maxiter=20)

    yhats.append(agg.get_answers())

- Running EM

Finished: : 21it [00:18, 1.13it/s]

Task difficulty coefficients saved at /home/circleci/project/doc/_build/notebooks/identification/glad/difficulties.npy

Worker ability coefficients saved at /home/circleci/project/doc/_build/notebooks/identification/glad/abilities.npy

Finished: 100% 20/20 [00:00<00:00, 135.75it/s]

[6]:

results = { # initialize results dictionary

"mv": [],

"glad": [],

"ds": [],

"naivesoft": [],

"dswc[l=5]": [],

"wawa": [],

}

for i, strategy in enumerate(results.keys()):

    labels = yhats[i] # load aggregated labels

    results[strategy].append(accuracy(labels, gt)) # compute accuracy

results["NS"] = results["naivesoft"] # rename naivesoft to NS

results.pop("naivesoft")

# Styling the results

results = pd.DataFrame(results, index=["AccTrain"])

results.columns = map(str.upper, results.columns)

results = results.style.set_table_styles(

[dict(selector="th", props=[("text-align", "center")])]

)

results.set_properties(**{"text-align": "center"})

results = results.format(precision=3)

results

[6]:

| | MV | GLAD | DS | DSWC[L=5] | WAWA | NS |
|---|---|---|---|---|---|---|
| AccTrain | 0.755 | 0.775 | 0.890 | 0.775 | 0.785 | 0.760 |

The small differences in accuracy between the command-line and API results come from ties being broken at random.
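To illustrate why random tie-breaking can change the result from one run to the next, here is a minimal sketch of a majority vote that picks uniformly among the most voted classes. It is an illustration under that assumption, not peerannot's actual implementation, and majority_vote_random_ties is a hypothetical helper.

```python
import numpy as np


def majority_vote_random_ties(votes, n_classes, rng):
    """Return the most voted class, picking uniformly among tied classes."""
    counts = np.bincount(list(votes.values()), minlength=n_classes)
    tied = np.flatnonzero(counts == counts.max())  # all classes with the top count
    return int(rng.choice(tied))


# Toy task (not from the simulated dataset): classes 1 and 2 both get two votes
tied_votes = {"0": 1, "1": 2, "2": 1, "3": 2}

# Repeat the vote with different seeds and collect the distinct outcomes
picks = {
    majority_vote_random_ties(tied_votes, 5, np.random.default_rng(seed))
    for seed in range(50)
}
print("Labels obtained across seeds:", sorted(picks))
```

Every task with a tie can therefore receive a different label depending on the state of the random generator, which is enough to shift the accuracy by a few tasks out of 200.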